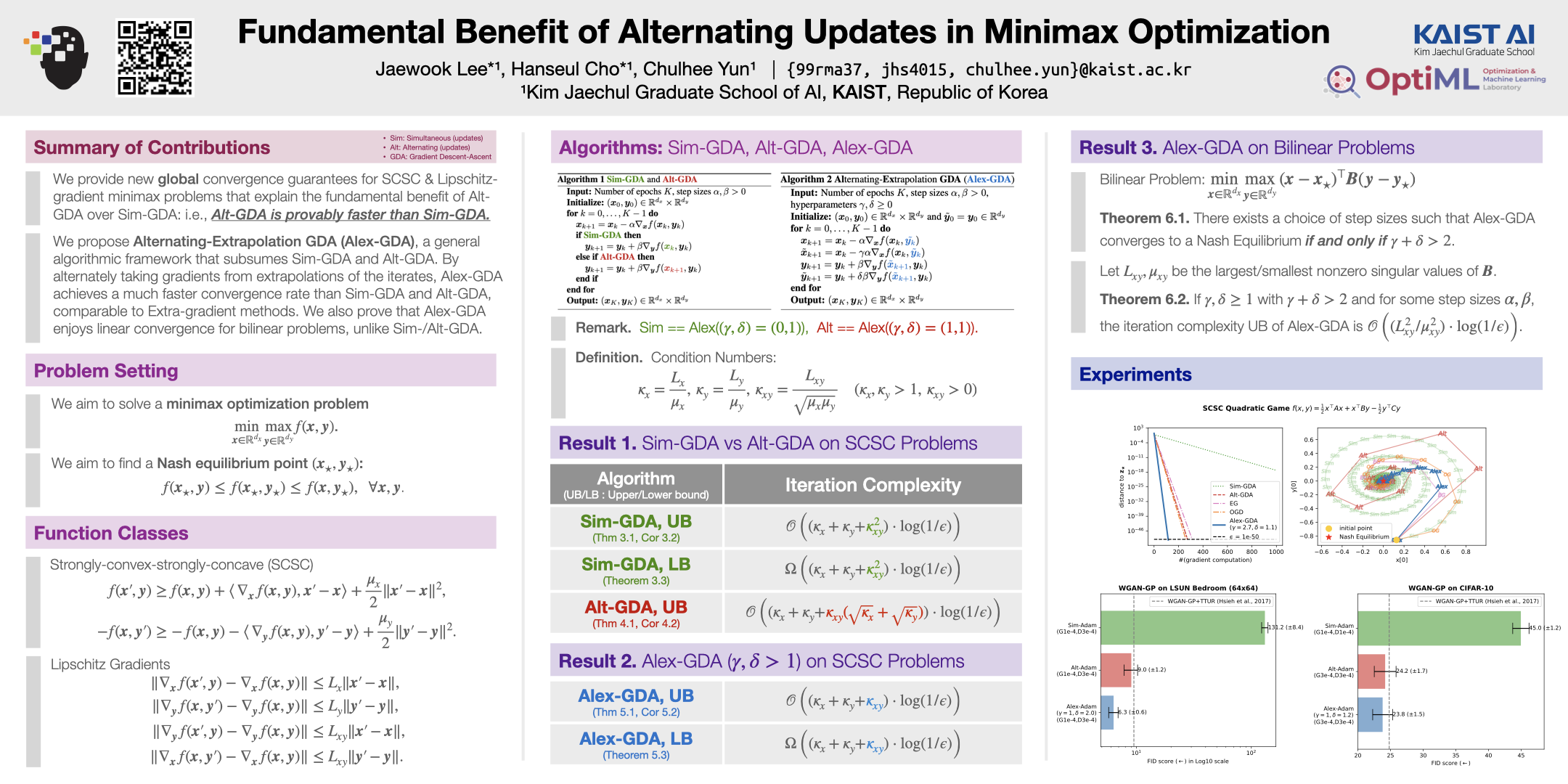

Fundamental Benefit of Alternating Updates in Minimax Optimization

👥 Jaewook Lee*, Hanseul Cho*, and Chulhee Yun

🗓 📰 ICML 2024 (Short version at ICLR 2024 Workshop on Bridging the Gap Between Practice and Theory in Deep Learning (BGPT))

Poster

Abstract

The Gradient Descent-Ascent (GDA) algorithm, designed to solve minimax optimization problems, takes the descent and ascent steps either simultaneously (Sim-GDA) or alternately (Alt-GDA). While Alt-GDA is commonly observed to converge faster, the performance gap between the two is not yet well understood theoretically, especially in terms of global convergence rates. To address this theory-practice gap, we present fine-grained convergence analyses of both algorithms for strongly-convex-strongly-concave and Lipschitz-gradient objectives. Our new iteration complexity upper bound of Alt-GDA is strictly smaller than the lower bound of Sim-GDA; i.e., Alt-GDA is provably faster. Moreover, we propose Alternating-Extrapolation GDA (Alex-GDA), a general algorithmic framework that subsumes Sim-GDA and Alt-GDA, for which the main idea is to alternately take gradients from extrapolations of the iterates. We show that Alex-GDA satisfies a smaller iteration complexity bound, identical to that of the Extra-gradient method, while requiring less gradient computations. We also prove that Alex-GDA enjoys linear convergence for bilinear problems, for which both Sim-GDA and Alt-GDA fail to converge at all.